Fiction Tagging Engine: Finding a Data Source

Project Overview

The fiction tagging engine is first step toward creating a story DNA database. The story DNA database will be the engine for a service that works like Spotify or Pandora but for text stories. The tagging engine will be an algorithm trained for general content identification.

Problem Statement

There are many readers who would like to know what content is present (is there graphic violence? transphobia? etc.) in a story. Most fictional text stories are not labeled in any way. My goal is to create an algorithm to generate content tags to provide content warnings and also help with filtering for content a reader wants. The scope of the project is fiction written in English. The intended audiences are any readers who may want to screen their TBR pile based on content.

When I broke down building this engine into smaller steps, I found there isn’t a dataset with the necessary labels that exists. To create a tagging engine based on labeled data, I’d first need to build a dataset of full text fiction stories with labels. With that smaller step in mind, the question became what is the best way to build that dataset?

Possible solutions to the Specific Challenge

- Combine datasets from LibraryThings, GoodReads, and Project Gutenberg

This was what I’d originally pictured but there were a few issues with this. LibraryThings and GoodReads both shut down public access to their APIs earlier this year. Based on the anecdata of what I’ve seen, most books available via Project Gutenberg are more heterosexual cis able-bodied Christian European-descent/white men than I’d like my dataset to be. The stories, the characters, and the authors need to be as diverse as possible to avoid introducing biases into the dataset. - Scrape websites of online fiction magazines and supplement with data from award websites

Online fiction magazines don’t have good tagging, so I’d have to read and tag each story manually. Plus I had difficulty getting permission from the publishers to scrape their websites. (Note: I understand that webscraping is a legal grey area. We are consent-based folk and I’m not scraping someone’s site without their permission.) - Use fan fiction from Archive of Our Own (AO3) into a dataset

This is the option I went with because the data from this source ticks off so many boxes.

The fics are:- publicly published

- full text stories with award-winning tag wrangled tags (labels)

- diverse range of characters and authors

- downloadable

Data Collection and Exploration

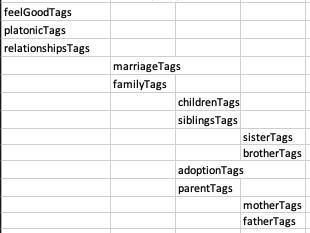

Using AO3 Statistics, a CSV download tool, I collected data on over 1,500 fics. I narrowed the dataset down to only text fic that did not explicitly state to not report/copy without duplicates. I started combing through the tags and putting tags into groups. In doing this I realized I would need to do another data pull. The next time focusing on the length of the fictional. I also limited the fandom sources to ones I know to make grouping tags easier and expanded the media source from only novels to all fictional media.

Informed Collection and Exploration

I downloaded about 1000 fics (HTML format) using AO3 Ebook Download Helper. This first round was for my capstone project for the Data Scientist program from Udacity. After getting the fics downloaded and giving them a cursory look, I’d found the raw data that would work. The next step was setting up a script to process each fic into a row of data in a dataframe. I’ll be discussing that step next week in Fiction Tagging Engine, Step 1.2: Building A Dataset – Building a Script to Make a Clean and Tidy Dataset.