Fiction Tagging Engine: Data Extraction

Data Exploration

The data exploration came in two parts. The first was going through the downloaded AO3 HTML files to see how best to load them into a dataframe. The second was the manual processing phase, where I clustered tags into lists. This blog post covers the first part: going through the HTML files to build a script that loads the data into a dataframe and exports the tags for manual processing.

HTML File Data Exploration

The full story text in the HTML files has two main structures: stories with chapters and stories without chapters. Of the 1039 HTML fanfics used in the initial gathering, 97.02% were processed using a Python script. I processed the remaining 2.98% manually rather than dropping the data.

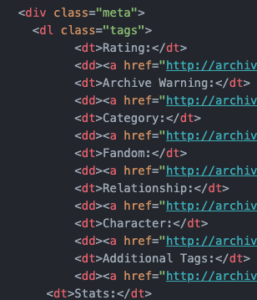

The variability of the file structures made extracting the tags and story text the most difficult parts of the script to get right. To get the tags class data I used a for loop with if/elif/else statements nested within it to grab the necessary data. The most reliable way to grab the story was a combination of for loops and if/elif/else statements. The text was sometimes nested six levels deep for a story without chapters and five levels deep for a story with chapters.
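As a rough illustration of that for loop with if/elif pattern (not the notebook's actual code), here is a minimal sketch. The sample markup is a simplified, made-up stand-in for a downloaded file, and the `extract_tags` name and dictionary keys are assumptions chosen for the example.

```python
from bs4 import BeautifulSoup

# Hypothetical, simplified stand-in for the tag block of a downloaded fanfic.
SAMPLE_HTML = """
<dl class="tags">
  <dt>Rating:</dt>
  <dd><a href="#">General Audiences</a></dd>
  <dt>Fandom:</dt>
  <dd><a href="#">The Addams Family</a></dd>
  <dt>Additional Tags:</dt>
  <dd><a href="#">Fluff</a>, <a href="#">Found Family</a></dd>
</dl>
"""


def extract_tags(html):
    """Return a dict of tag-class values, with None for anything not found."""
    # Start every field as None so all keys exist even when a file lacks them.
    tags = {"rating": None, "fandoms": None, "additionalTags": None}
    soup = BeautifulSoup(html, "html.parser")
    tag_class = soup.find("dl", class_="tags")
    if tag_class is None:
        return tags
    # Walk each label and decide which field its values belong to.
    for label in tag_class.find_all("dt"):
        value = label.find_next_sibling("dd")
        if value is None:
            continue
        names = [a.get_text(strip=True) for a in value.find_all("a")]
        heading = label.get_text(strip=True)
        if heading.startswith("Rating"):
            tags["rating"] = names
        elif heading.startswith("Fandom"):
            tags["fandoms"] = names
        elif heading.startswith("Additional Tags"):
            tags["additionalTags"] = names
    return tags


result = extract_tags(SAMPLE_HTML)
print(result)
```

Starting every field as None means a file that is missing a section still produces a complete dictionary, which keeps the later dataframe columns consistent.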

You can get the cleaning and tidying script here: https://github.com/jfrankbryant/ao3ETLpipeline (please note this script needs to be cleaned up and is currently in a Jupyter notebook)

What each step of the script does to extract the data:

- Import necessary libraries

- Pandas

- NumPy

- BeautifulSoup

- os

- SQLAlchemy; create_engine

- Using the os library, we read the file names in the named directory into a list. The removal code removes the filenames of unsuitable files from the list, which keeps those files from being read in. Last, we add the directory name to the filenames to allow for automated reading in of files in step 5.

- Set up functions to extract the individual tag data from the fanfic HTML files

- extractStoryRating: if/elif statement nested inside of a for loop to grab the story rating

- extractArchiveWarning: if/elif statement nested inside of a for loop to grab the archive warning

- extractCategory: if/elif statement nested inside of a for loop to grab the story category

- extractFandoms: if/elif statement nested inside of a for loop to grab the story fandom

- extractRelationships: if/elif statement nested inside of a for loop to grab the characters in relationships and their relationship configuration

- extractCharacters: if/elif statement nested inside of a for loop to grab the characters in the story

- extractAddTags: if/elif statement nested inside of a for loop to grab the additional tags

- extractSeries: if/elif statement nested inside of a for loop to grab the series information

- extractCollections: if/elif statement nested inside of a for loop to grab the collection information

- extractPubStats: if/elif statement nested inside of a for loop to grab the publication stats (publish date, chapter count, word count)

- Set up extractTags function to call all of the tag class extraction functions

- set all variables being loaded into the dictionary to have a null so that all variables exist

- set tagClass variable to have the value of the file’s tag class

- if/elif statements nested inside of a for loop to grab the tag data and replace the null values

- load the variables into a dictionary and return the dictionary (this is easier than returning all of the variables individually)

- Set up functions to extract other fanfic metadata (author, title, story text, URL) and roll all the information together into a dictionary

- extractAuthor: for loop to extract author name (AO3 username)

- extractTitle: if/elif statement nested inside of a for loop to grab story title

- extractStory: for loop going through the “chapters” element to check for span tags, div tags, and p tags to extract the story text string by string and remove the HTML tags from the story text

- extractStoryChapters: for loop going through the “chapters” element to check for “chapter content”, span tags, and p tags to extract the story text string by string

- extractStoryURL: if/elif statement nested inside of a for loop to grab story URL

- createAO3dict: for loop that goes through the file list created in step 2, creating a BeautifulSoup object for each element in the list, calling the previous functions (including extractTags), loading the returned values into a dictionary for the file and then loading the file dictionary into a dictionary for all of the files

- Call the createAO3dict function, then convert the returned value into a dataframe and convert the word count column from object type to int type

This creates a dataframe with each row being a file and each column being the data about that file. Converting the word count from an object to an integer is a good check to make sure the correct information is in the word count column. If there are non-integers in the column, create a dataframe slice where the word count column matches the data in the error message.

- Check for blank story text in dataframe and process the data accordingly

- Read in story text manually

- Drop rows with blank text

- If you are going to run this script more than once, add any stories you are dropping to the remove_fanfics list at the top to save a little time on future runs

- Set up listifyTags function to loop through each cell in the specified column, flatten the list of lists into a single list, consolidate that list into unique phrases sorted in alphabetical order, and convert the list to a dataframe so each phrase has its own row

- Call the function to create separate files for additional tags, fandoms, category, relationships, and character for manual processing

- Send the additional tags, fandoms, category, relationships, and character dataframes to CSV files to be manually processed

- If you don’t want to process your data again, send the in-process dataframe to a CSV and read it back in when the tags have been manually processed (instead of processing all of the data again)
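The listifyTags step above can be sketched roughly like this. It is a simplified illustration rather than the notebook's code: the `listify_tags` name, the sample stories, and the commented-out CSV filename are assumptions, and it assumes each cell holds a Python list of tags (or None for a story without any).

```python
import pandas as pd


def listify_tags(df, column):
    """Collect every tag in `column` into a one-column dataframe of unique, sorted phrases."""
    unique_tags = set()
    for cell in df[column]:
        # Skip cells that are not lists (for example None for a story with no tags).
        if isinstance(cell, list):
            unique_tags.update(cell)
    # One row per unique phrase, in alphabetical order.
    return pd.DataFrame({column: sorted(unique_tags)})


# Made-up sample data standing in for the real dataframe.
stories = pd.DataFrame({
    "title": ["Story A", "Story B", "Story C"],
    "additionalTags": [["Fluff", "Angst"], ["Angst", "Slow Burn"], None],
})

tag_table = listify_tags(stories, "additionalTags")
print(tag_table)

# Export for manual processing (hypothetical filename):
# tag_table.to_csv("additionalTags.csv", index=False)
```

Calling the same function once per column (fandoms, category, relationships, characters) would give one file per tag type.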

Depending on how much data you’ve pulled, this may take you a week or several. I’ve included the tag groupings I’ve done thus far, although in looking over my code I’ve found a definite area for improvement with tag processing and will cover that in a few weeks.

The rest of the steps I go over in Fiction Tagging Engine: Incorporating Manually Processed Tags.

Data Preprocessing

I’m proud of the preprocessing steps in the ETL pipeline: feature engineering the additionalTags column to have standardized tags, then one-hot encoding the standardized tags so a machine learning algorithm can be run on the data later. That said, I understand there are some definite improvements to be made to clean up the script I’ve written.

One-hot encoding the feature-engineered additionalTags column also involved a StackOverflow post, which led me to the Pandas documentation and its handy explode method. That method allowed me to create a row of data for each tag so the dataset could be one-hot encoded. I wish there was an implode method, but I did my best by converting ‘0’ to NaNs and then using the groupby method so that each story has only one row in the dataset with all of its tags one-hot encoded.
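Here is a minimal sketch of the explode, encode, and regroup idea on made-up data. Note that it is a variant rather than the exact approach described: instead of converting zeros to NaNs, it collapses the rows with a groupby on the original index and takes the maximum of each indicator column. The sample stories are assumptions for the example.

```python
import pandas as pd

# Made-up sample data: each cell already holds a list of standardized tags.
stories = pd.DataFrame({
    "title": ["Story A", "Story B"],
    "additionalTags": [["transTags", "addamsFamilyTags"], ["addamsFamilyTags"]],
})

# explode gives each tag its own row, repeating the story's index label.
exploded = stories.explode("additionalTags")

# One indicator column per tag, still one row per (story, tag) pair.
dummies = pd.get_dummies(exploded["additionalTags"], dtype=int)

# The "implode" step: collapse rows that share an index label back into one row per story.
one_hot = dummies.groupby(level=0).max()

# Reattach the encoded columns to the story metadata.
encoded = stories[["title"]].join(one_hot)
print(encoded)
```

Taking the maximum works because an indicator column is 1 in at least one of a story's exploded rows exactly when the story carries that tag.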

The dataset requires manual processing because every person thinks and tags differently. Tag standardization produces a processed dataset where similar terms are remapped to have the same label.

Improvement

Keeping track of the different loops within loops was crucial to extracting the data from the HTML files; however, it also makes the code harder to read and possibly slower to run. I’d like to try replacing the various letter loops (i, j, k, etc.) with enumerate to keep track of the loops, which should make the code easier to read and possibly faster to run.
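As a small illustration of what that change could look like, here is a sketch of nested loops written with enumerate and descriptive names instead of letter counters. The `chapters` data is made up for the example, and whether this runs measurably faster would need to be timed on the real files.

```python
# Made-up stand-in for story text: a list of chapters, each a list of paragraphs.
chapters = [["First paragraph.", "Second paragraph."], ["Third paragraph."]]

rows = []
# enumerate hands back the position and the item together, so no manual i/j counters.
for chapter_number, paragraphs in enumerate(chapters, start=1):
    for paragraph_number, text in enumerate(paragraphs, start=1):
        rows.append((chapter_number, paragraph_number, text))

print(rows)
```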

Another aspect I would like to improve is having a tag that relates to multiple groups be replaced by all of the group tags for that tag. For example, the tag ‘trans gomez addams’ should be replaced by both transTags and addamsFamilyTags. The way the code is set up right now, the tag is replaced by whichever one comes first. A possible solution is to have the code search for the tag in the dictionary’s values, pull out the keys for those values, and add those keys to the list for the cell. This will probably make the code run slower, but it would result in better labeling for the dataset.
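The lookup described above could be sketched like this. It is one possible approach, not the current script: the contents of `tag_groups` and the `standardize` function name are assumptions for the example, and in this sketch a tag that matches no group is simply left out.

```python
# Hypothetical group dictionary: each key is a group label, each value the raw tags it covers.
tag_groups = {
    "transTags": ["trans gomez addams", "trans character"],
    "addamsFamilyTags": ["trans gomez addams", "wednesday addams"],
}


def standardize(raw_tags, groups):
    """Replace each raw tag with every group label whose value list contains it."""
    labels = []
    for tag in raw_tags:
        # Search every group's values, not just the first group that matches.
        matches = [group for group, members in groups.items() if tag in members]
        for group in matches:
            if group not in labels:  # avoid duplicate labels within one cell
                labels.append(group)
    return labels


print(standardize(["trans gomez addams"], tag_groups))
```

If the extra scanning turns out to be too slow, inverting the dictionary once (raw tag to list of group labels) before processing would avoid searching every group for every tag.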

A third aspect I would like to improve is being able to automate ingestion of all the types of HTML files that can be downloaded from AO3. Right now the extract scripts can process 97.02% of the downloaded fanfics. Automating the rest would greatly speed up augmenting the dataset; however, for such a small percentage of files, it is currently acceptable to either leave them out of the dataset or process them manually like I did.

Edits:

7/03: splitting up the instructions for the ETL pipeline to be in two posts instead of one